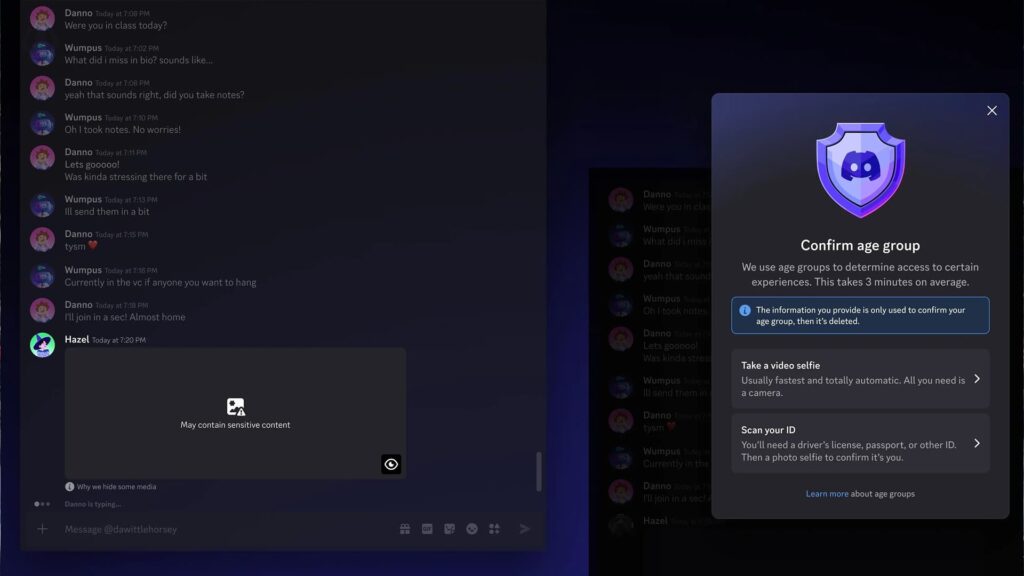

Discord is gearing up to implement a significant policy change aimed at strengthening youth safety across the platform. Starting next month, users may encounter a new age verification process before gaining access to communities labeled for mature audiences.

Under the upcoming system, accounts will be placed in a teen-focused experience by default. Users who want to enter age-restricted servers or interact with adult-oriented content will be asked to confirm that they meet the required age threshold. Verification is expected to involve either a face scan through the device camera or an official ID, depending on regional requirements and risk checks.

The move signals Discord’s broader attempt to draw clearer boundaries between younger users and sensitive spaces. As online communities continue to grow, platforms face increasing pressure to keep minors away from inappropriate material while still offering a smooth experience for adult users. This type of verification could also reduce false age declarations, a long-standing issue across social platforms.

However, stricter verification inevitably brings privacy discussions back into focus. Some users are likely to question how biometric data is handled, how long it is stored, and whether such measures could become standard practice across the industry. For Discord, the challenge will be balancing stronger safety controls with the trust of its global user base.

As digital platforms gradually adopt more robust identity checks, this shift may represent part of a larger transformation in how online spaces are regulated. How do you see this change affecting the future of online platforms? Share your thoughts and stay tuned to VGNW for more updates, insights, and coverage from the gaming world. Follow us on X and keep an eye out for what comes next!